What is LVM?

In Linux, the Logical Volume Manager (LVM) is a device mapper framework that provides logical volume management for the Linux kernel. Most modern Linux distributions are LVM-aware to the point of being able to have their root file systems on a logical volume.

Let’s understand...

What is elasticity?

Elasticity is defined as the degree to which a system is able to adapt to workload changes by provisioning and de-provisioning resources in an autonomic manner, such that at each point in time the available resources match the current demand as closely as possible.

Now let us see how to integrate LVM with Hadoop and how to provide elasticity to DataNode storage.

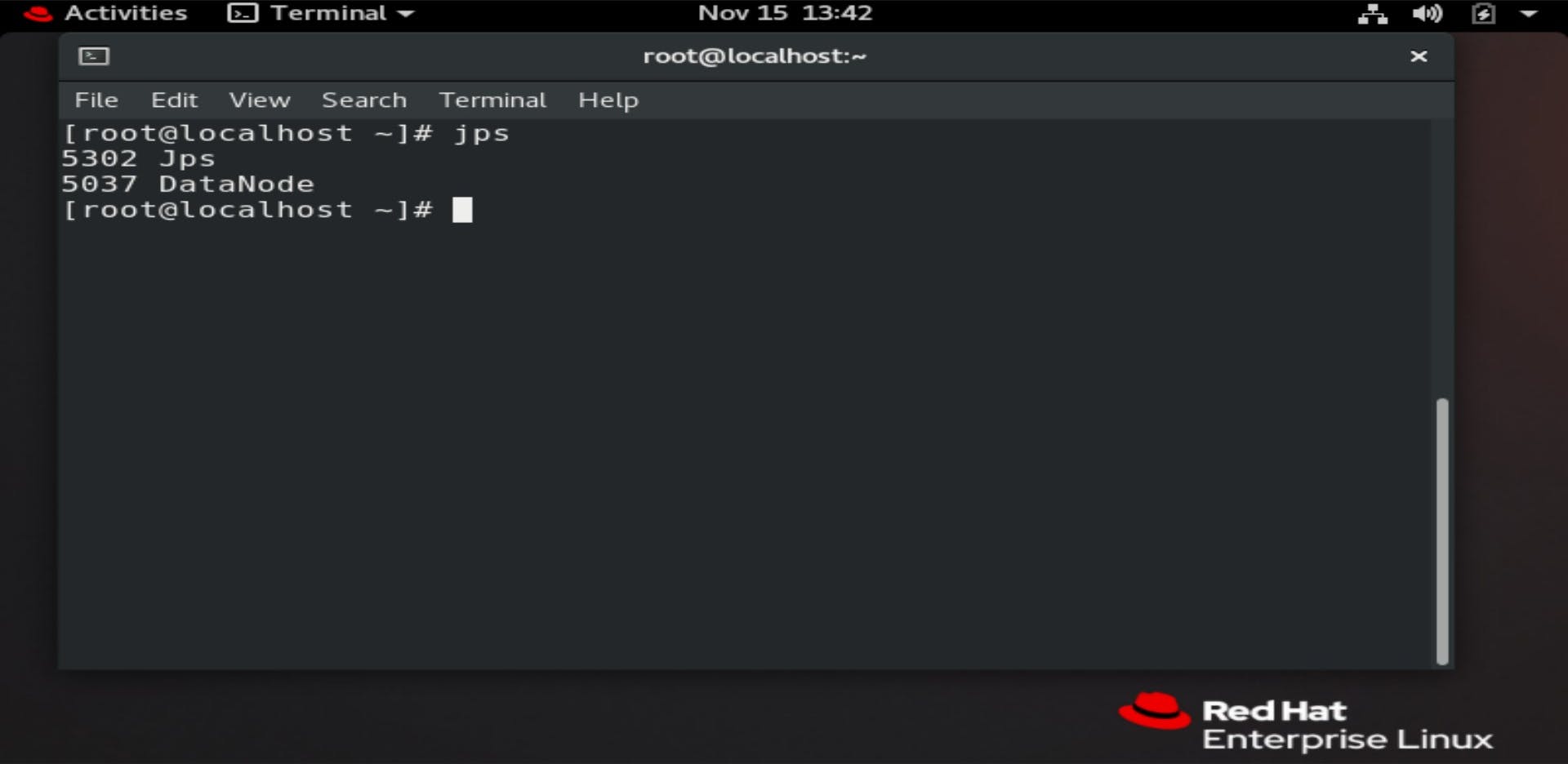

For this setup, we used one NameNode and one DataNode, both running on local systems.

Download Hadoop and Java (Oracle JDK), then install and configure them and start the Hadoop daemons.

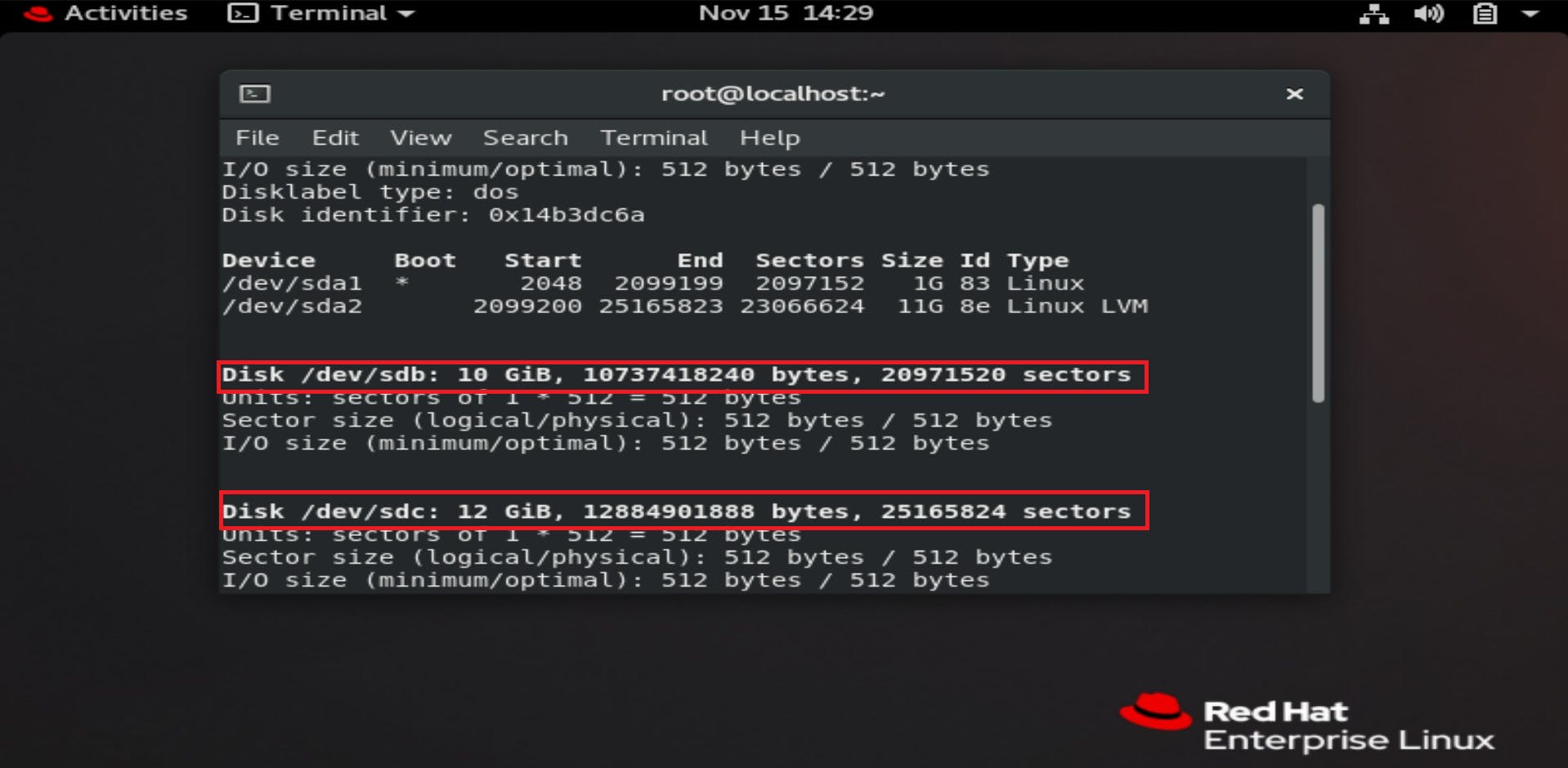
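As a minimal sketch, the daemons can be started from the shell on each machine. This assumes Hadoop 1.x or 2.x with the hadoop-daemon.sh script on the PATH (Hadoop 3.x uses hdfs --daemon start instead). The start_daemon function and the DRY_RUN switch are our own hypothetical helpers; by default the script only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Sketch: start one HDFS daemon and confirm it is running.
# Assumption: Hadoop 1.x/2.x with hadoop-daemon.sh on the PATH.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # print-only by default; export DRY_RUN=0 to execute

# Echo a command, and execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [[ "$DRY_RUN" == 0 ]]; then "$@"; fi
}

start_daemon() {
  local role="$1"  # "namenode" on the NameNode, "datanode" on the DataNode
  run hadoop-daemon.sh start "$role"
  run jps          # lists Java processes; NameNode or DataNode should appear
}

start_daemon "${1:-datanode}"
```

Run it with DRY_RUN=0 and the argument namenode on the NameNode, or datanode on the DataNode.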

We attached two additional hard disks to our DataNode, each with 10GB of space. We wanted to share 15GB of storage from the DataNode to the NameNode, which is more than either disk offers on its own, and later we also needed to extend the amount of storage the DataNode shares. With the help of LVM we handled all of this easily, including extending the volume.

To list the disks attached to the DataNode, run fdisk -l:

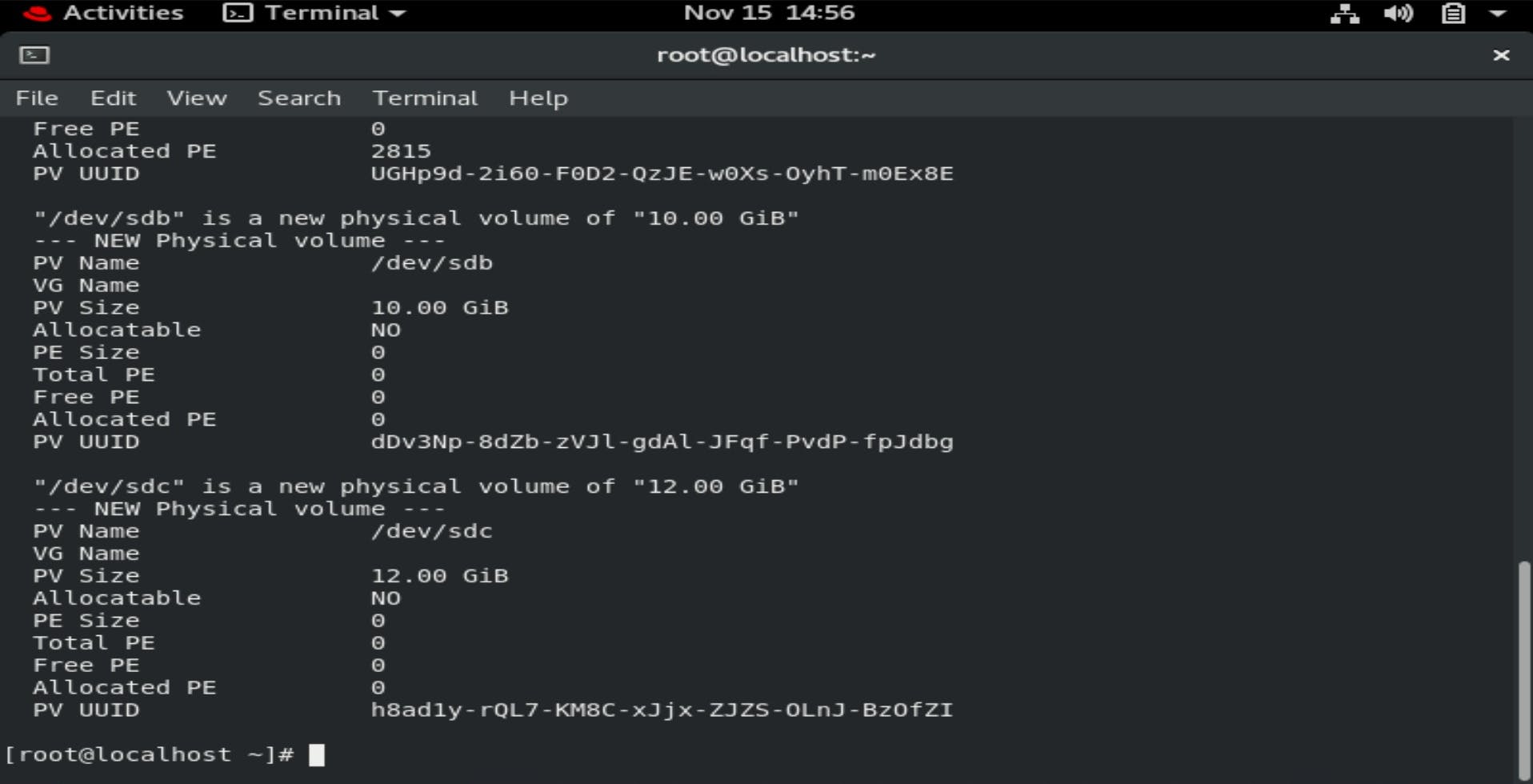

Here we can see the two new disks: /dev/sdb and /dev/sdc.
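Before creating physical volumes, it is worth confirming that the device names really are block devices, because pvcreate writes LVM metadata to whatever device it is given. A small sketch; check_disks is a hypothetical helper, not part of LVM.

```shell
#!/usr/bin/env bash
# Sketch: confirm the disks seen in "fdisk -l" exist as block devices
# before handing them to LVM. Device names are the ones from this setup.

check_disks() {
  local disk status=0
  for disk in "$@"; do
    if [[ -b "$disk" ]]; then
      echo "ok: $disk is a block device"
    else
      echo "missing: $disk is not a block device" >&2
      status=1
    fi
  done
  return "$status"
}

check_disks /dev/sdb /dev/sdc || echo "Re-check the names printed by fdisk -l." >&2
```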

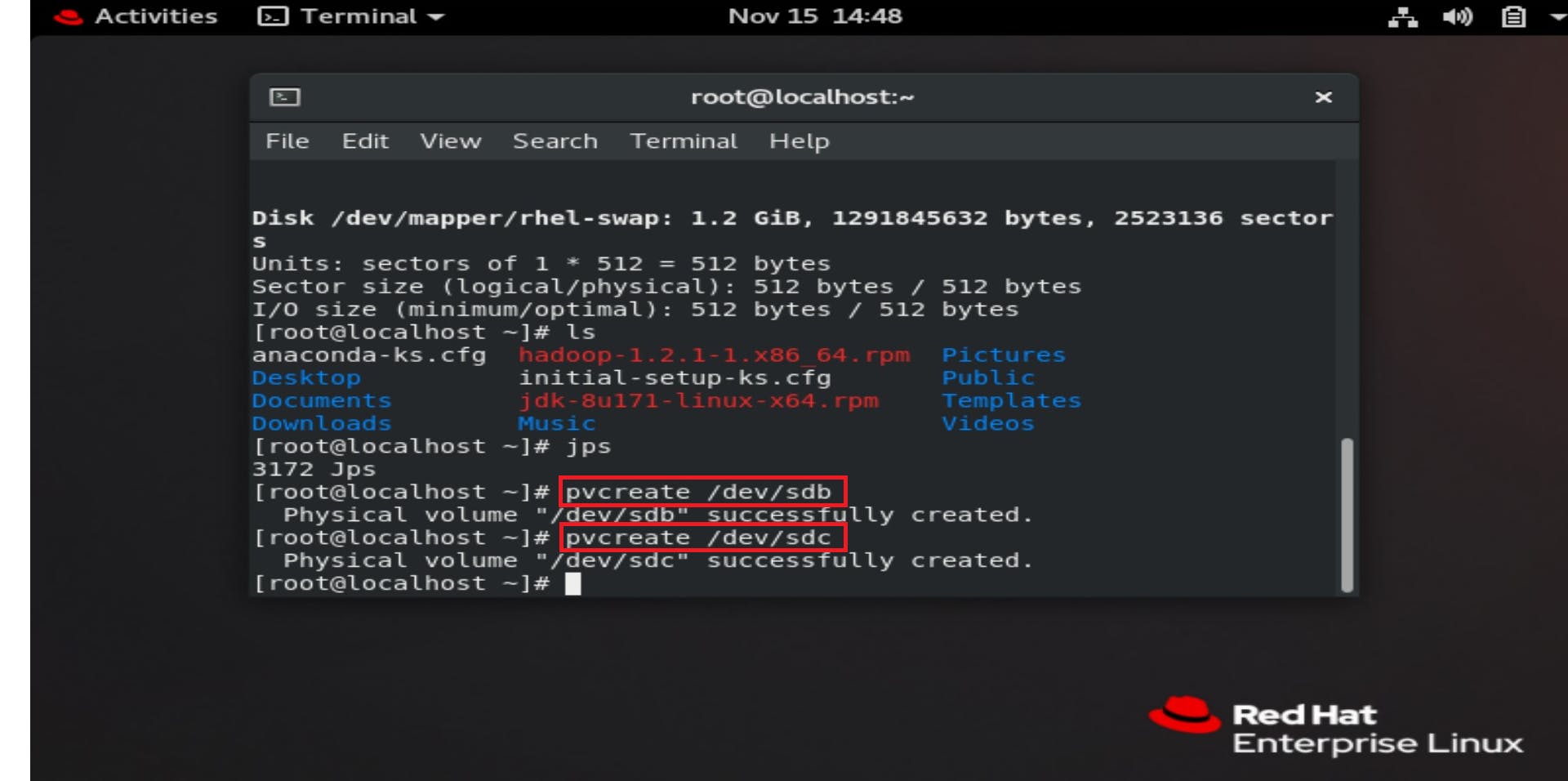

Now we create a physical volume on each disk using pvcreate /dev/sdb and pvcreate /dev/sdc.

We can verify the new physical volumes with the pvdisplay command.

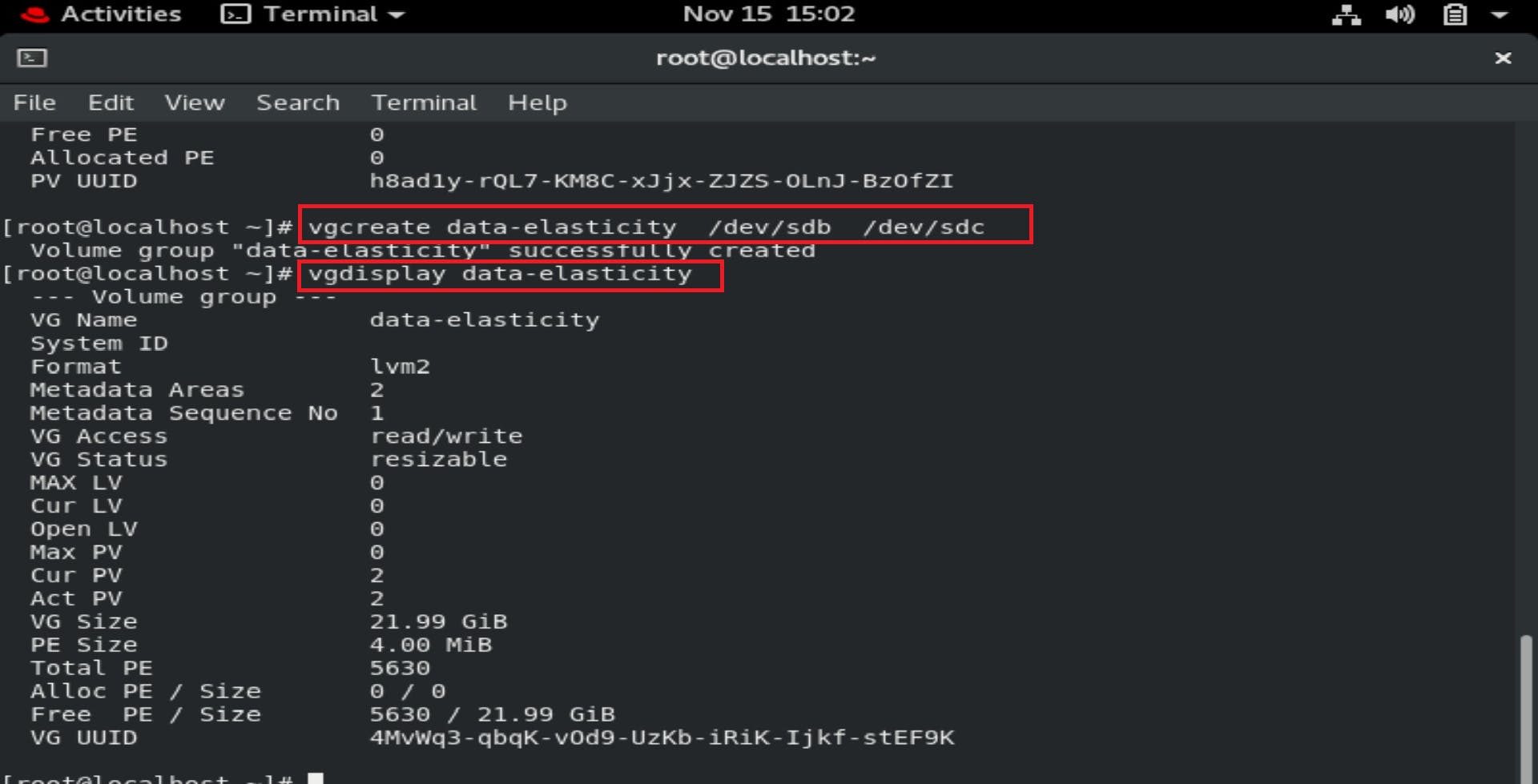
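The same step as a script, sketched under the assumption that the disks are /dev/sdb and /dev/sdc as above. create_pvs and the DRY_RUN switch are hypothetical helpers; with the default DRY_RUN=1 the commands are only printed.

```shell
#!/usr/bin/env bash
# Sketch: initialise each disk as an LVM physical volume, then display them.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # print-only by default; export DRY_RUN=0 to execute

# Echo a command, and execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [[ "$DRY_RUN" == 0 ]]; then "$@"; fi
}

create_pvs() {
  local disk
  for disk in "$@"; do
    run pvcreate "$disk"   # writes an LVM label and metadata area to the disk
  done
  run pvdisplay "$@"       # shows size and state of the new physical volumes
}

create_pvs /dev/sdb /dev/sdc
```

pvcreate also accepts several devices in one call, so pvcreate /dev/sdb /dev/sdc does the same job.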

Next, we create a volume group named data-elasticity from both physical volumes using vgcreate data-elasticity /dev/sdb /dev/sdc

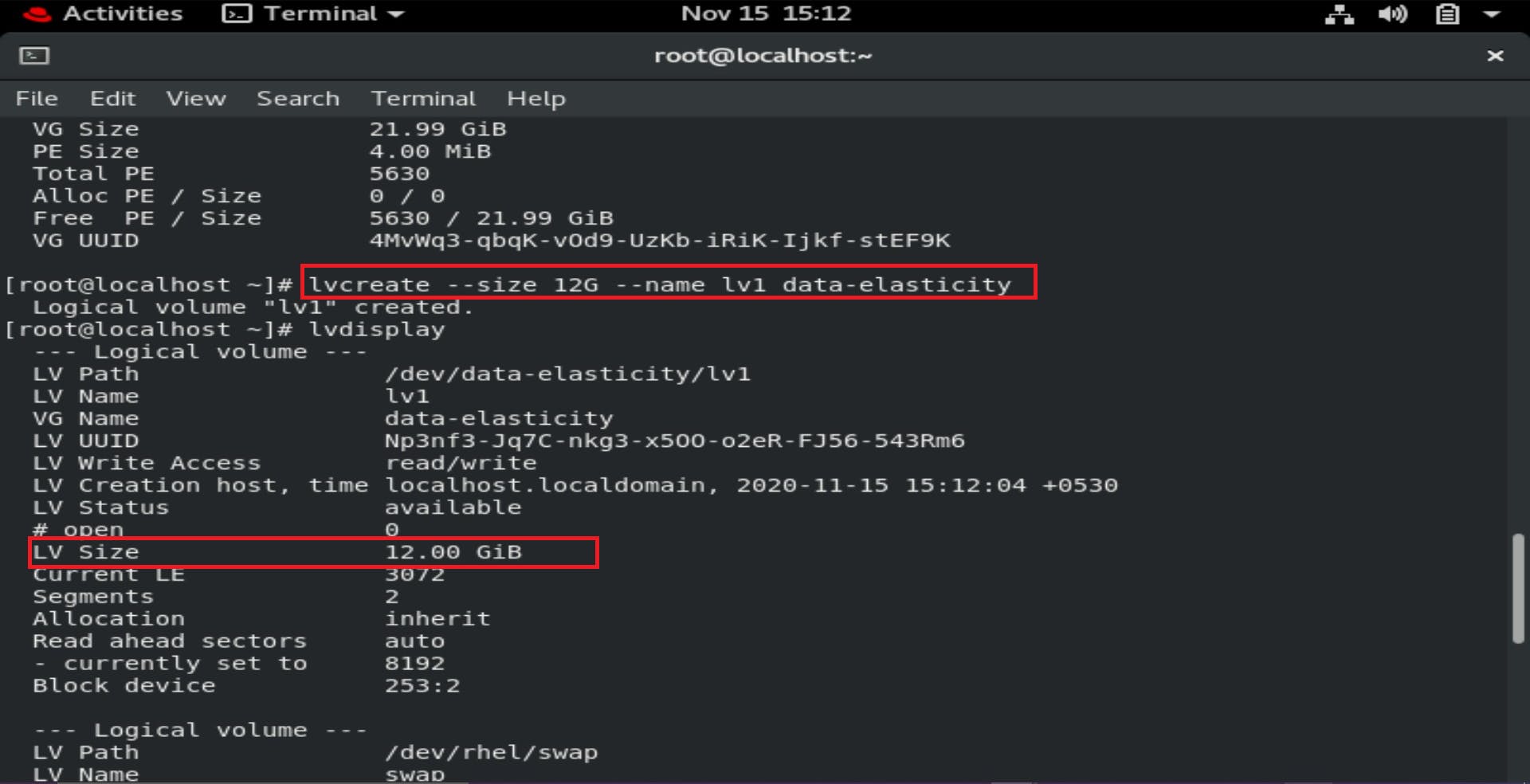
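A sketch of the volume group step using the group name from this article. create_vg and DRY_RUN are hypothetical helpers; vgdisplay is included because its "VG Size" and "Free PE / Size" fields show how much pooled space is available for logical volumes.

```shell
#!/usr/bin/env bash
# Sketch: pool physical volumes into one volume group and show its size.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # print-only by default; export DRY_RUN=0 to execute

# Echo a command, and execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [[ "$DRY_RUN" == 0 ]]; then "$@"; fi
}

create_vg() {
  local vg="$1"
  shift
  run vgcreate "$vg" "$@"   # the remaining arguments are the physical volumes
  run vgdisplay "$vg"       # check "VG Size" and "Free PE / Size"
}

create_vg data-elasticity /dev/sdb /dev/sdc
```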


Now we create a logical volume in the volume group using the lvcreate command.

Our volume group has 22GiB of space, so we created a logical volume of 12GiB.

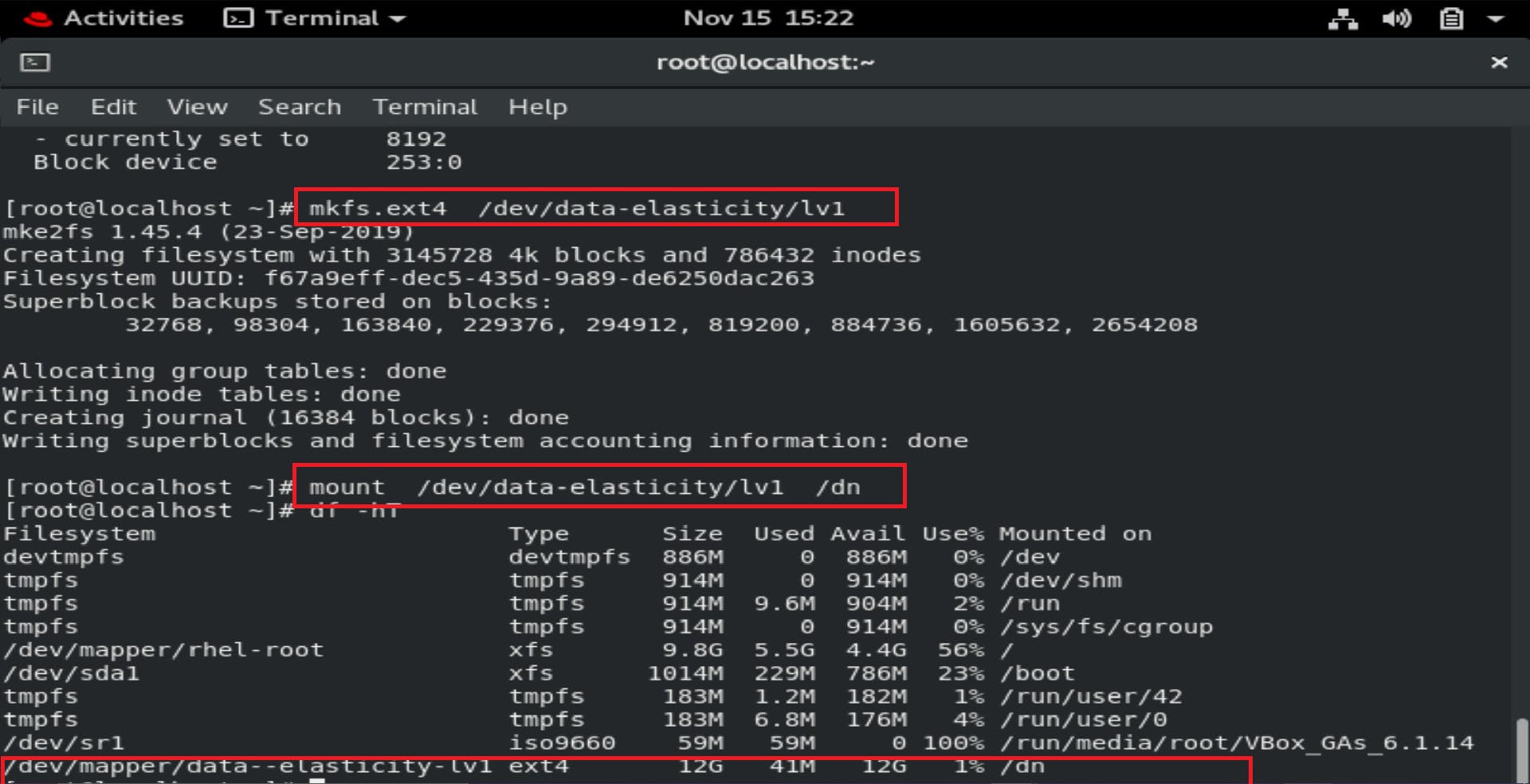
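A sketch of the lvcreate step, assuming the logical volume is named lv1 (the name used later in this article). LVM size arguments use binary units, so 12G means 12GiB. create_lv and DRY_RUN are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: carve a fixed-size logical volume out of the volume group.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # print-only by default; export DRY_RUN=0 to execute

# Echo a command, and execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [[ "$DRY_RUN" == 0 ]]; then "$@"; fi
}

create_lv() {
  local vg="$1" name="$2" size="$3"
  run lvcreate --size "$size" --name "$name" "$vg"
  run lvdisplay "/dev/$vg/$name"   # the new LV appears under /dev/<vg>/<name>
}

create_lv data-elasticity lv1 12G
```

The remaining free space in the group stays unallocated, which is what later allows lv1 to grow.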


Our logical volume has been created successfully. The next step is to format it and mount it on the /dn directory, through which the DataNode contributes its storage to the NameNode.

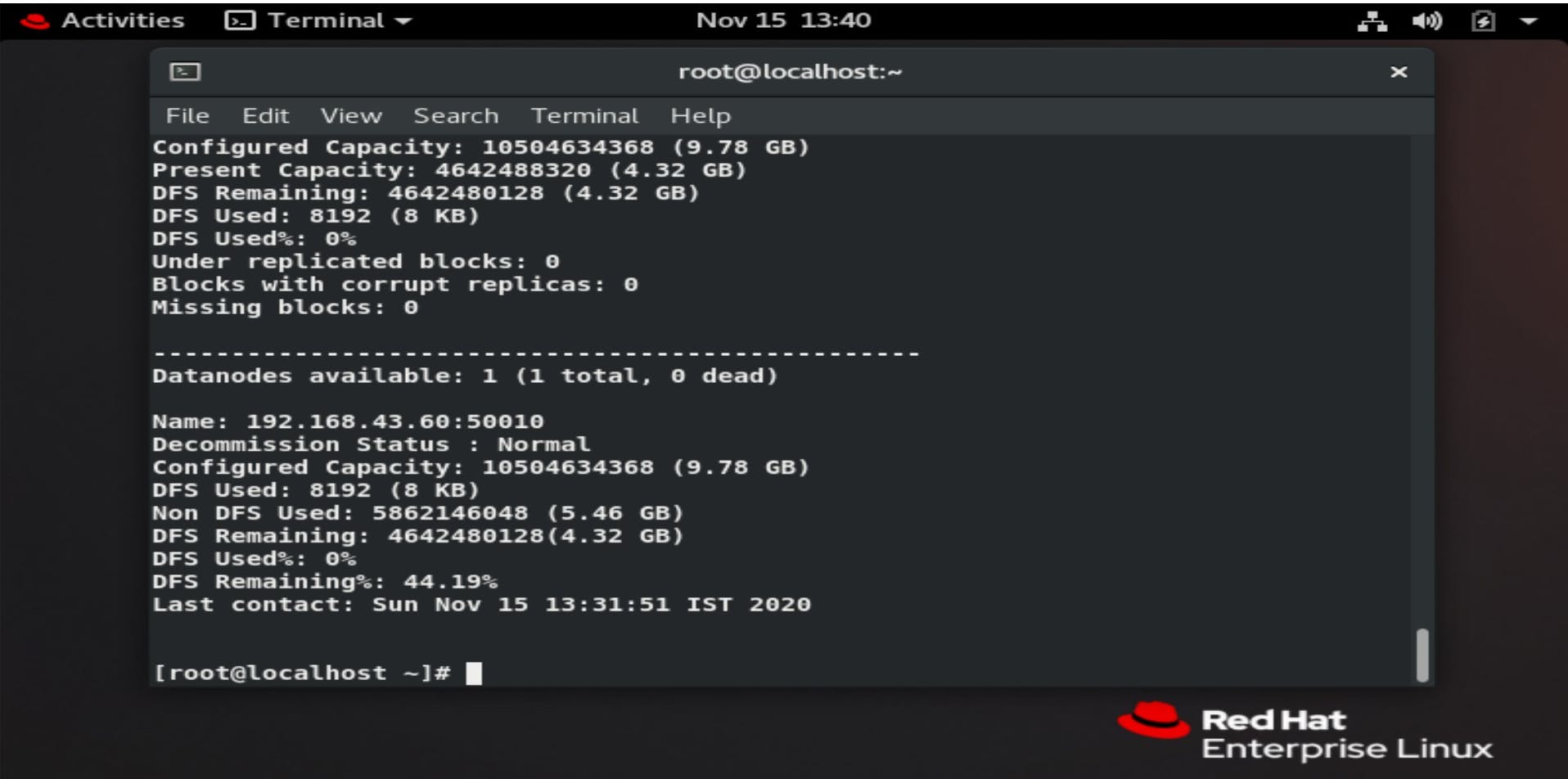
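A sketch of formatting and mounting, assuming ext4 (the filesystem is not named here; ext4 is a convenient choice because it can be grown while mounted). For the DataNode to store blocks on /dn, hdfs-site.xml must point at it: dfs.data.dir on Hadoop 1.x, dfs.datanode.data.dir on Hadoop 2.x and later, followed by a DataNode restart. The mount also does not survive a reboot unless it is added to /etc/fstab. format_and_mount and DRY_RUN are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: put a filesystem on the logical volume and mount it for the DataNode.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # print-only by default; export DRY_RUN=0 to execute

# Echo a command, and execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [[ "$DRY_RUN" == 0 ]]; then "$@"; fi
}

format_and_mount() {
  local dev="$1" mnt="$2"
  run mkfs.ext4 "$dev"     # assumption: ext4; this erases anything on the LV
  run mkdir -p "$mnt"
  run mount "$dev" "$mnt"
  run df -h "$mnt"         # roughly the capacity the DataNode will report
}

format_and_mount /dev/data-elasticity/lv1 /dn
```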


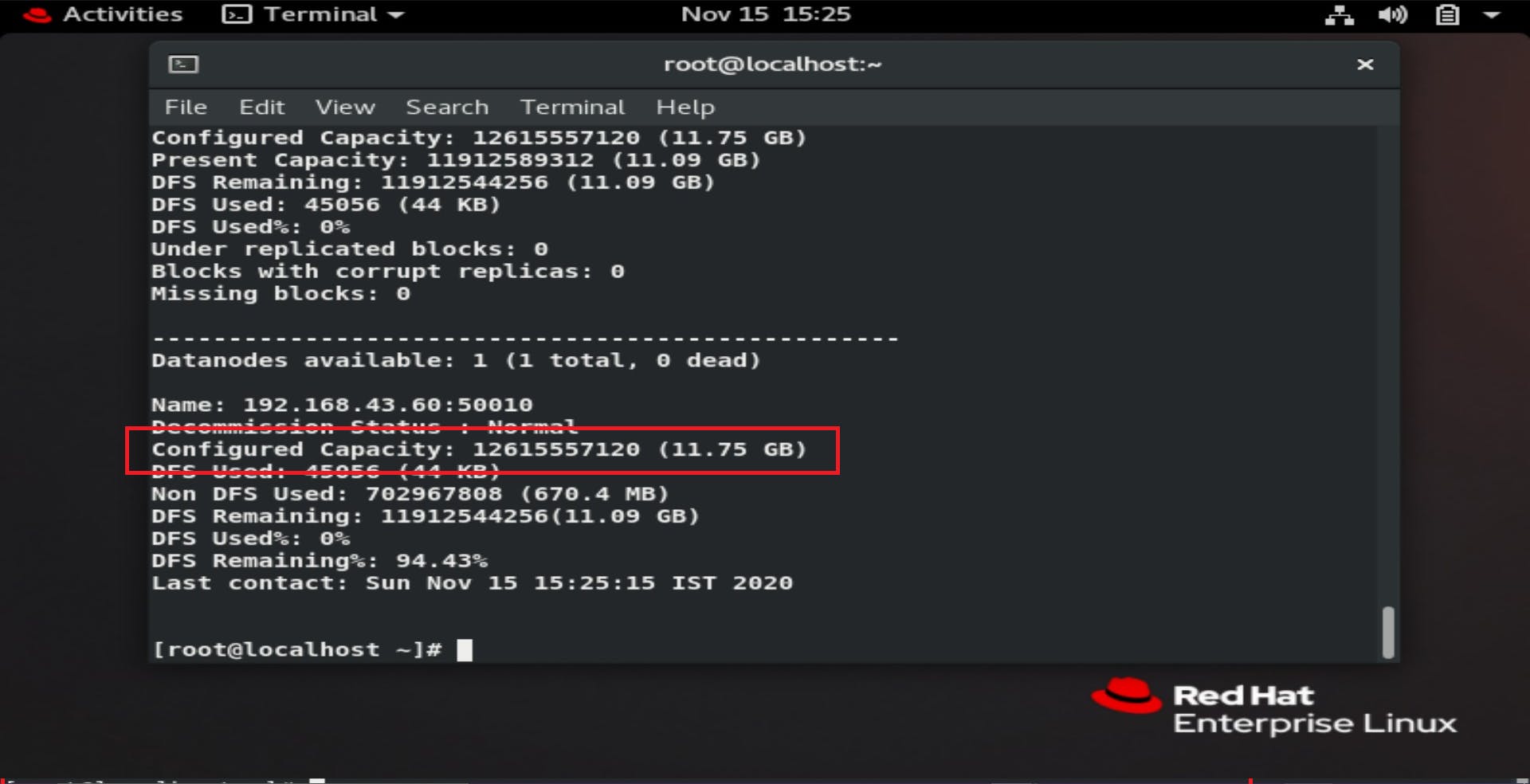

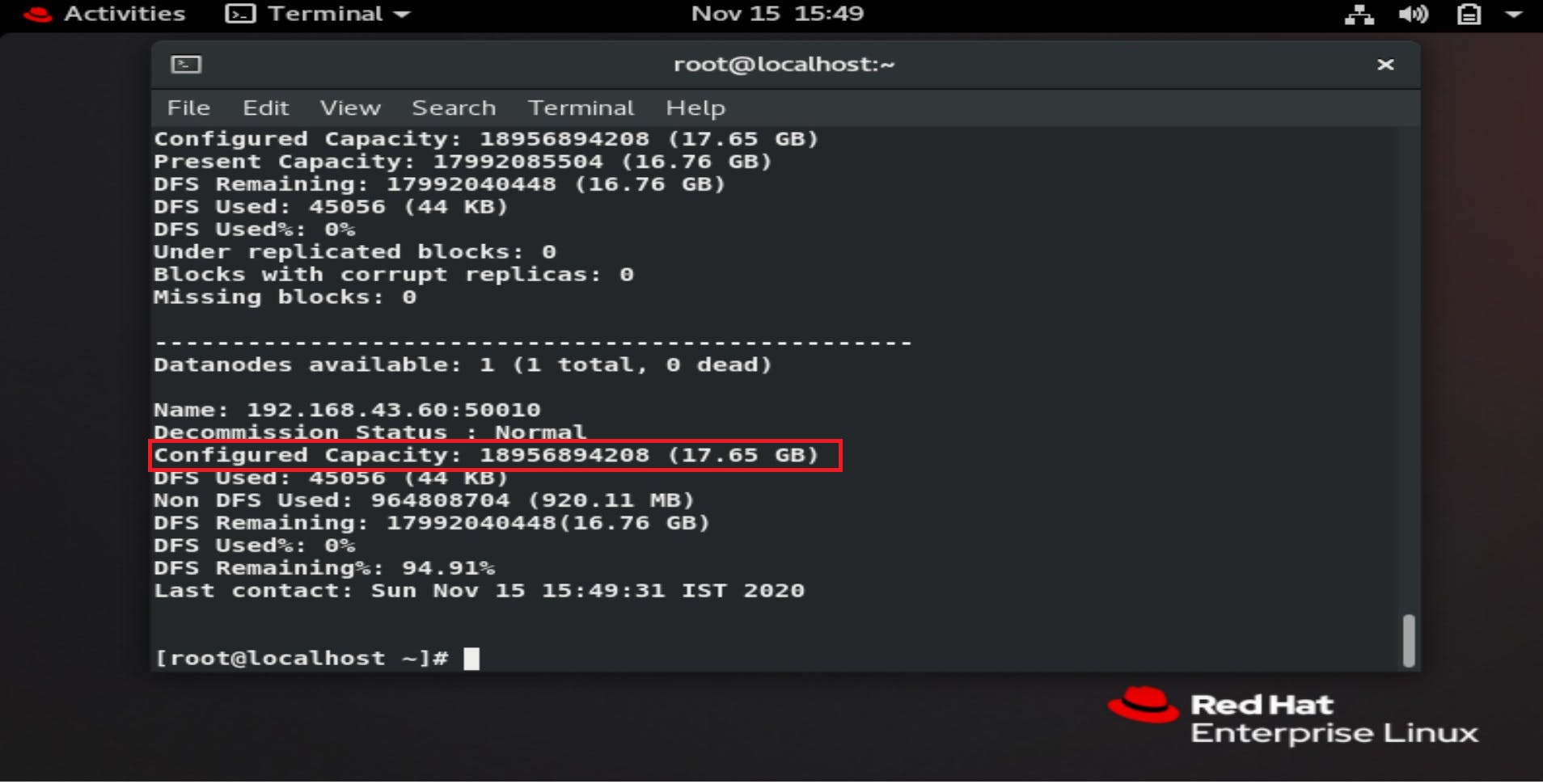

We can check the storage the DataNode contributes with hadoop dfsadmin -report.
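The report prints each capacity as a byte count followed by a human-readable value, in the form Configured Capacity: bytes (size). A small hypothetical helper can extract the first such value in GiB. With a single DataNode, the cluster total and the per-node figure should match; expect it to sit somewhat below the LV size because of filesystem overhead and any space reserved through dfs.datanode.du.reserved.

```shell
#!/usr/bin/env bash
# Sketch: read a dfsadmin report on stdin and print the first
# "Configured Capacity" value converted from bytes to GiB.
set -euo pipefail

configured_capacity_gib() {
  awk -F': ' '
    /^Configured Capacity:/ && !done {
      split($2, parts, " ")                  # "<bytes> (<human size>)" -> bytes first
      printf "%.2f\n", parts[1] / (1024 ^ 3)
      done = 1                               # ignore later per-DataNode lines
    }'
}

# Usage on the NameNode (assumes the hadoop command is on the PATH):
#   hadoop dfsadmin -report | configured_capacity_gib
```

The awk program keeps reading to the end of the input instead of exiting early, so the hadoop command is never cut off by a closed pipe.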

Providing elasticity to DataNode

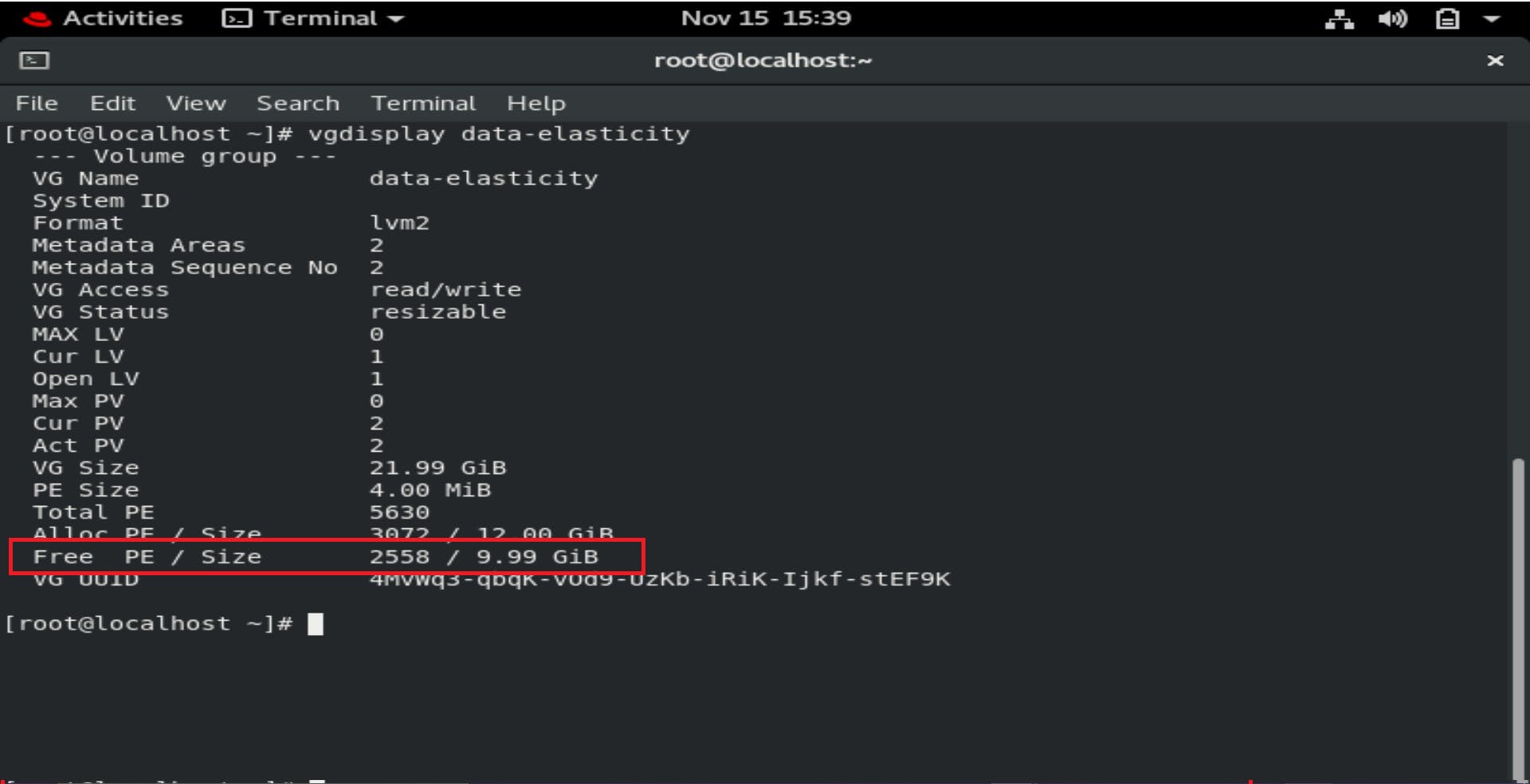

Since 10GiB of space is still free in our volume group, we can increase or decrease the size of lv1 to provide elasticity to the DataNode.

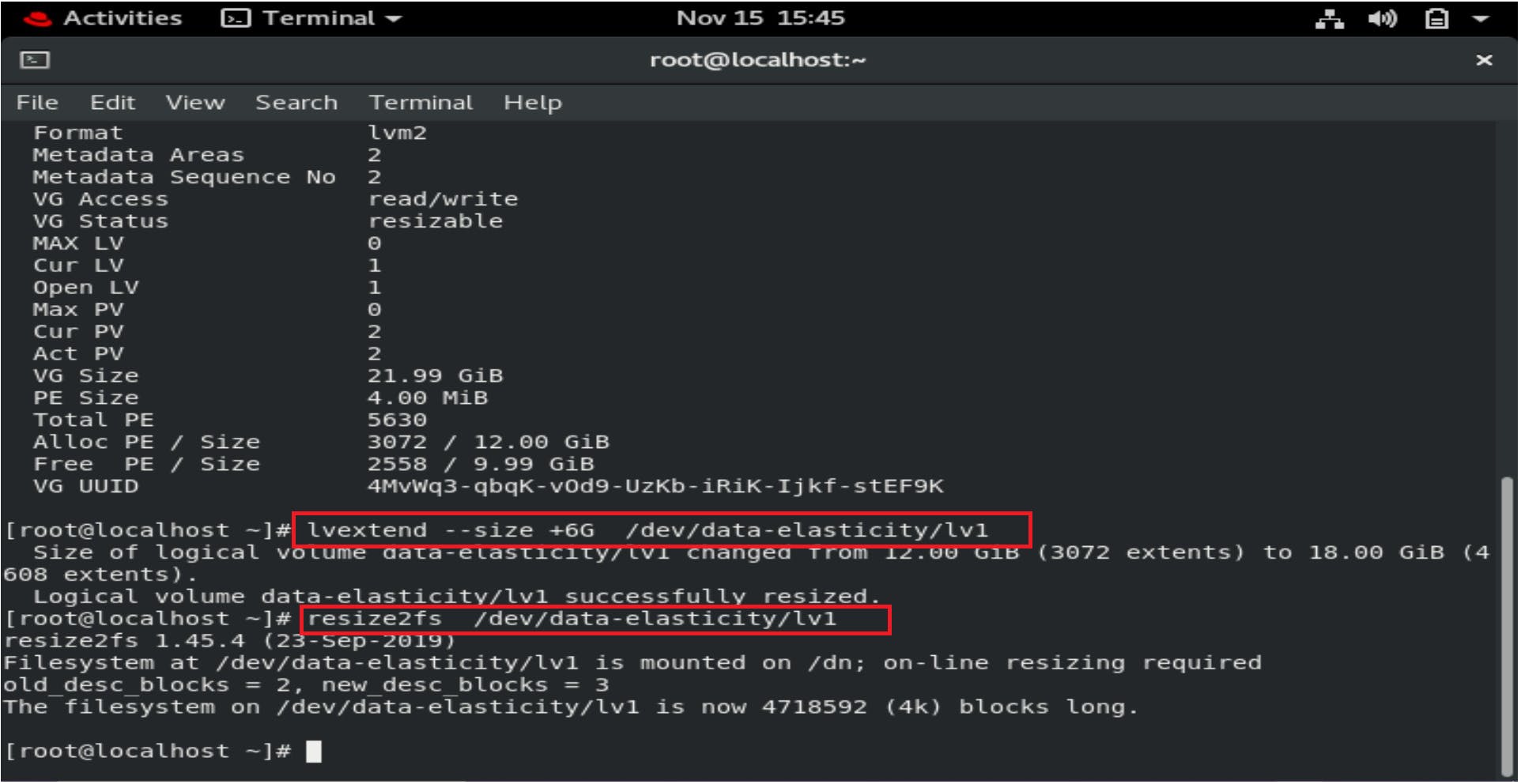
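Shrinking lv1, the other direction mentioned above, is riskier than growing it and is not demonstrated in this walkthrough. ext4 cannot be shrunk while mounted, so the DataNode has to go offline, and the filesystem must be shrunk before the logical volume, never the other way round, or data is lost. The sketch below assumes ext4, the hadoop-daemon.sh script from Hadoop 1.x/2.x, and a purely illustrative target size of 10G that must still hold every HDFS block stored on /dn; shrink_lv and DRY_RUN are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: shrink an ext4-formatted logical volume used as a DataNode directory.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # print-only by default; export DRY_RUN=0 to execute

# Echo a command, and execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [[ "$DRY_RUN" == 0 ]]; then "$@"; fi
}

shrink_lv() {
  local dev="$1" mnt="$2" size="$3"
  run hadoop-daemon.sh stop datanode   # assumption: Hadoop 1.x/2.x helper script
  run umount "$mnt"                    # ext4 cannot be shrunk while mounted
  run e2fsck -f "$dev"                 # resize2fs wants a freshly checked filesystem
  run resize2fs "$dev" "$size"         # shrink the filesystem first ...
  run lvreduce --size "$size" "$dev"   # ... then the LV to the same size (asks to confirm)
  run mount "$dev" "$mnt"
  run hadoop-daemon.sh start datanode
}

shrink_lv /dev/data-elasticity/lv1 /dn 10G   # 10G is only an example size
```

Both resize2fs and LVM treat G as GiB, so the filesystem and the logical volume end up the same size.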

Let us now extend our logical volume (lv1) by 6GiB so that the DataNode can contribute 18GiB of storage to the NameNode instead of 12GiB.
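Extending the logical volume alone is not enough: the filesystem on it must also be grown, otherwise the DataNode keeps reporting the old size. A sketch assuming ext4, which resize2fs can grow while mounted (for XFS, xfs_growfs /dn would replace resize2fs); extend_lv and DRY_RUN are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: grow a mounted ext4 logical volume without stopping the DataNode.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"  # print-only by default; export DRY_RUN=0 to execute

# Echo a command, and execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [[ "$DRY_RUN" == 0 ]]; then "$@"; fi
}

extend_lv() {
  local dev="$1" by="$2"
  run lvextend --size "+$by" "$dev"   # take free extents from the volume group
  run resize2fs "$dev"                # with no size given, grow to fill the LV
}

extend_lv /dev/data-elasticity/lv1 6G
```

lvextend can also resize the filesystem in the same step with its -r (--resizefs) option, for example lvextend -r -L +6G /dev/data-elasticity/lv1.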



Now run hadoop dfsadmin -report on the NameNode again and check the reported capacity.
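If the report still shows the old capacity, first check whether the filesystem itself grew. A portable sketch using POSIX df -Pk; fs_size_gib is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: print the size of the filesystem that contains a path, in GiB.
set -euo pipefail

fs_size_gib() {
  # POSIX df: -P gives one line per filesystem, -k reports 1024-byte blocks.
  df -Pk "$1" | awk 'NR == 2 { printf "%.2f\n", $2 / (1024 ^ 2) }'
}

# Usage on the DataNode:
#   fs_size_gib /dn
```

After the extension, the value for /dn should have grown by roughly 6GiB; if it has not, the logical volume was extended but the filesystem on it was not.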

Congratulations! We increased the DataNode's contribution from 12GiB to 18GiB. The Logical Volume Management concept is what makes this possible.